ns-3 Manual¶

This is the ns-3 Manual. Primary documentation for the ns-3 project is organized as follows:

- Several guides that are version controlled for each release (the

latest release) and

development tree:

- Tutorial

- Installation Guide

- Manual (this document)

- Model Library

- Contributing Guide

- ns-3 Doxygen: Documentation of the public APIs of the simulator

- ns-3 wiki

This document is written in reStructuredText for Sphinx and is maintained in the

doc/manual directory of ns-3’s source code. Source file column width is 100 columns.

1. Organization¶

This chapter describes the overall ns-3 software organization and the corresponding organization of this manual.

ns-3 is a discrete-event network simulator in which the simulation core and models are implemented in C++. ns-3 is built as a library which may be statically or dynamically linked to a C++ main program that defines the simulation topology and starts the simulator. ns-3 also exports nearly all of its API to Python, allowing Python programs to import an “ns3” module in much the same way as the ns-3 library is linked by executables in C++.

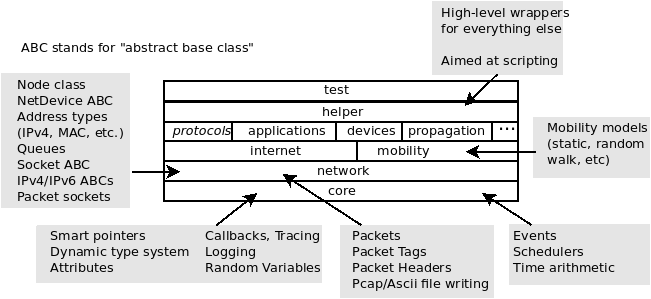

Software organization of ns-3

The source code for ns-3 is mostly organized in the src directory and

can be described by the diagram in Software organization of ns-3. We will

work our way from the bottom up; in general, modules only have dependencies

on modules beneath them in the figure.

We first describe the core of the simulator; those components that are

common across all protocol, hardware, and environmental models.

The simulation core is implemented in src/core. Packets are

fundamental objects in a network simulator

and are implemented in src/network. These two simulation modules by

themselves are intended to comprise a generic simulation core that can be

used by different kinds of networks, not just Internet-based networks. The

above modules of ns-3 are independent of specific network and device

models, which are covered in subsequent parts of this manual.

In addition to the above ns-3 core, we introduce, also in the initial portion of the manual, two other modules that supplement the core C++-based API. ns-3 programs may access all of the API directly or may make use of a so-called helper API that provides convenient wrappers or encapsulation of low-level API calls. The fact that ns-3 programs can be written to two APIs (or a combination thereof) is a fundamental aspect of the simulator. We also describe how Python is supported in ns-3 before moving onto specific models of relevance to network simulation.

The remainder of the manual is focused on documenting the models and

supporting capabilities. The next part focuses on two fundamental objects in

ns-3: the Node and NetDevice. Two special NetDevice types are

designed to support network emulation use cases, and emulation is described

next. The following chapter is devoted to Internet-related models,

including the

sockets API used by Internet applications. The next chapter covers

applications, and the following chapter describes additional support for

simulation, such as animators and statistics.

The project maintains a manual section devoted to testing and validation of ns-3 code (see the tests section in the ns-3 manual).

2. Simulator¶

This chapter explains some of the core ns-3 simulator concepts.

2.1. Events and Simulator¶

ns-3 is a discrete-event network simulator. Conceptually, the simulator keeps track of a number of events that are scheduled to execute at a specified simulation time. The job of the simulator is to execute the events in sequential time order. Once the completion of an event occurs, the simulator will move to the next event (or will exit if there are no more events in the event queue). If, for example, an event scheduled for simulation time “100 seconds” is executed, and the next event is not scheduled until “200 seconds”, the simulator will immediately jump from 100 seconds to 200 seconds (of simulation time) to execute the next event. This is what is meant by “discrete-event” simulator.

To make this all happen, the simulator needs a few things:

- a simulator object that can access an event queue where events are stored and that can manage the execution of events

- a scheduler responsible for inserting and removing events from the queue

- a way to represent simulation time

- the events themselves

This chapter of the manual describes these fundamental objects (simulator, scheduler, time, event) and how they are used.

2.1.1. Event¶

An event represents something that changes the simulation status, i.e., between two events the simulation status does not change, and the event will likely change it (it could also not change anything).

Note that another way to understand an event is to consider it as a delayed function call. With the due differences, a discrete event simulation is not much different from a “normal” program where the functions are not called immediately, but are marked with a “time”, and the time is used to decide the order of the functions execution.

The time, of course, is a simulated time, and is quite different from the “real” time. Depending on the simulation complexity the simulated time can advance faster or slower then the “real” time, but like a “real” time can only go forward.

An example of an event is the reception of a packet, or the expiration of a timer.

An event is represented by:

- The time at which the event will happen

- A pointer to the function that will “handle” the event,

- The parameters of the function that will handle the event (if any),

- Other internal structures.

An event is scheduled through a call to Simulator::Schedule, and once

scheduled, it can be canceled or removed.

Removal implies removal from the scheduler data structure, while cancel

keeps them in the data structure but sets a boolean flag that suppresses

calling the bound event function at the scheduled time. When an event is

scheduled by the Simulator, an EventId is returned. The client may use

this event ID to later cancel or remove the event; see the example program

src/core/examples/sample-simulator.{cc,py} for example usage.

Cancelling an event is typically less computationally expensive than

removing it, but cancelled events consumes more memory in the scheduler

data structure, which might impact its performances.

Events are stored by the simulator in a scheduler data structure. Events are handled in increasing order of simulator time, and in the case of two events with the same scheduled time, the event with the lowest unique ID (a monotonically increasing counter) will be handled first. In other words tied events are handled in FIFO order.

Note that concurrent events (events that happen at the very same time) are unlikely in a real system - not to say impossible. In ns-3 concurrent events are common for a number of reasons, one of them being the time representation. While developing a model this must be carefully taken into account.

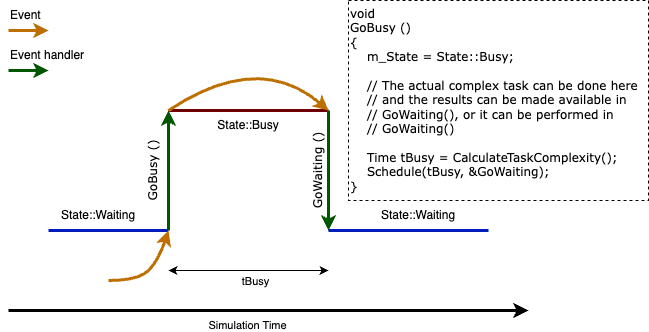

During the event execution, the simulation time will not advance, i.e., each event is executed in zero time. This is a common assumption in discrete event simulations, and holds when the computational complexity of the operations executed in the event is negligible. When this assumption does not hold, it is necessary to schedule a second event to mimic the end of the computationally intensive task.

As an example, suppose to have a device that receives a packet and has to perform a complex analysis on it (e.g., an image processing task). The sequence of events will be:

- T(t) - Packet reception and processing, save the result somewhere, and schedule an event in (t+d) marking the end of the data processing.

- T(t+d) - Retrieve the data, and do other stuff based them.

So, even if the data processing actually did return a result in the execution of the first event, the data is considered valid only after the second event.

The image below can be useful to clarify the idea.

2.1.2. Simulator¶

The Simulator class is the public entry point to access event scheduling

facilities. Once a couple of events have been scheduled to start the

simulation, the user can start to execute them by entering the simulator

main loop (call Simulator::Run). Once the main loop starts running, it

will sequentially execute all scheduled events in order from oldest to

most recent until there are either no more events left in the event

queue or Simulator::Stop has been called.

To schedule events for execution by the simulator main loop, the Simulator class provides the Simulator::Schedule* family of functions.

- Handling event handlers with different signatures

These functions are declared and implemented as C++ templates to handle automatically the wide variety of C++ event handler signatures used in the wild. For example, to schedule an event to execute 10 seconds in the future, and invoke a C++ method or function with specific arguments, you might write this:

void handler(int arg0, int arg1)

{

std::cout << "handler called with argument arg0=" << arg0 << " and

arg1=" << arg1 << std::endl;

}

Simulator::Schedule(Seconds(10), &handler, 10, 5);

Which will output:

handler called with argument arg0=10 and arg1=5

Of course, these C++ templates can also handle transparently member methods on C++ objects:

To be completed: member method example

Notes:

- the ns-3 Schedule methods recognize automatically functions and methods only if they take less than 5 arguments. If you need them to support more arguments, please, file a bug report.

- Readers familiar with the term ‘fully-bound functors’ will recognize the Simulator::Schedule methods as a way to automatically construct such objects.

- Common scheduling operations

The Simulator API was designed to make it really simple to schedule most events. It provides three variants to do so (ordered from most commonly used to least commonly used):

- Schedule methods which allow you to schedule an event in the future by providing the delay between the current simulation time and the expiration date of the target event.

- ScheduleNow methods which allow you to schedule an event for the current simulation time: they will execute _after_ the current event is finished executing but _before_ the simulation time is changed for the next event.

- ScheduleDestroy methods which allow you to hook in the shutdown process of the Simulator to cleanup simulation resources: every ‘destroy’ event is executed when the user calls the Simulator::Destroy method.

- Maintaining the simulation context

There are two basic ways to schedule events, with and without context. What does this mean?

Simulator::Schedule(Time const &time, MEM mem_ptr, OBJ obj);

vs.

Simulator::ScheduleWithContext(uint32_t context, Time const &time, MEM mem_ptr, OBJ obj);

Readers who invest time and effort in developing or using a non-trivial simulation model will know the value of the ns-3 logging framework to debug simple and complex simulations alike. One of the important features that is provided by this logging framework is the automatic display of the network node id associated with the ‘currently’ running event.

The node id of the currently executing network node is in fact tracked by the Simulator class. It can be accessed with the Simulator::GetContext method which returns the ‘context’ (a 32-bit integer) associated and stored in the currently-executing event. In some rare cases, when an event is not associated with a specific network node, its ‘context’ is set to 0xffffffff.

To associate a context to each event, the Schedule, and ScheduleNow methods automatically reuse the context of the currently-executing event as the context of the event scheduled for execution later.

In some cases, most notably when simulating the transmission of a packet from a node to another, this behavior is undesirable since the expected context of the reception event is that of the receiving node, not the sending node. To avoid this problem, the Simulator class provides a specific schedule method: ScheduleWithContext which allows one to provide explicitly the node id of the receiving node associated with the receive event.

XXX: code example

In some very rare cases, developers might need to modify or understand how the context (node id) of the first event is set to that of its associated node. This is accomplished by the NodeList class: whenever a new node is created, the NodeList class uses ScheduleWithContext to schedule a ‘initialize’ event for this node. The ‘initialize’ event thus executes with a context set to that of the node id and can use the normal variety of Schedule methods. It invokes the Node::Initialize method which propagates the ‘initialize’ event by calling the DoInitialize method for each object associated with the node. The DoInitialize method overridden in some of these objects (most notably in the Application base class) will schedule some events (most notably Application::StartApplication) which will in turn scheduling traffic generation events which will in turn schedule network-level events.

Notes:

- Users need to be careful to propagate DoInitialize methods across objects by calling Initialize explicitly on their member objects

- The context id associated with each ScheduleWithContext method has other uses beyond logging: it is used by an experimental branch of ns-3 to perform parallel simulation on multicore systems using multithreading.

The Simulator::* functions do not know what the context is: they merely make sure that whatever context you specify with ScheduleWithContext is available when the corresponding event executes with ::GetContext.

It is up to the models implemented on top of Simulator::* to interpret the context value. In ns-3, the network models interpret the context as the node id of the node which generated an event. This is why it is important to call ScheduleWithContext in ns3::Channel subclasses because we are generating an event from node i to node j and we want to make sure that the event which will run on node j has the right context.

2.1.2.1. Available Simulator Engines¶

ns-3 supplies two different types of basic simulator engine to manage event execution. These are derived from the abstract base class SimulatorImpl:

- DefaultSimulatorImpl This is a classic sequential discrete event simulator engine which uses a single thread of execution. This engine executes events as fast as possible.

- DistributedSimulatorImpl This is a classic YAWNS distributed (“parallel”) simulator engine. By labeling and instantiating your model components appropriately this engine will execute the model in parallel across many compute processes, yet in a time-synchronized way, as if the model had executed sequentially. The two advantages are to execute models faster and to execute models too large to fit in one compute node. This engine also attempts to execute as fast as possible.

- NullMessageSimulatorImpl This implements a variant of the Chandy- Misra-Bryant (CMB) null message algorithm for parallel simulation. Like DistributedSimulatorImpl this requires appropriate labeling and instantiation of model components. This engine attempts to execute events as fast as possible.

You can choose which simulator engine to use by setting a global variable, for example:

GlobalValue::Bind("SimulatorImplementationType",

StringValue("ns3::DistributedSimulatorImpl"));

or by using a command line argument

$ ./ns3 run "... --SimulatorImplementationType=ns3::DistributedSimulatorImpl"

In addition to the basic simulator engines there is a general facility used to build “adapters” which provide small behavior modifications to one of the core SimulatorImpl engines. The adapter base class is SimulatorAdapter, itself derived from SimulatorImpl. SimulatorAdapter uses the PIMPL (pointer to implementation) idiom to forward all calls to the configured base simulator engine. This makes it easy to provide small customizations just by overriding the specific Simulator calls needed, and allowing SimulatorAdapter to handle the rest.

There are few places where adapters are used currently:

- RealtimeSimulatorImpl This adapter attempts to execute in real time by pacing the wall clock evolution. This pacing is “best effort”, meaning actual event execution may not occur exactly in sync, but close to it. This engine is normally only used with the DefaultSimulatorImpl, but it can be used to keep a distributed simulation synchronized with real time. See the RealTime chapter.

- VisualSimulatorImpl This adapter starts a live visualization of the running simulation, showing the network graph and each packet traversing the links.

- LocalTimeSimulatorImpl This adapter enables attaching noisy local clocks to Nodes, then scheduling events with respect to the local noisy clock, instead of relative to the true simulator time.

In addition to the PIMPL idiom of SimulatorAdapter there is a special per-event customization hook:

SimulatorImpl::PreEventHook( const EventId & id)

One can use this to perform any housekeeping actions before the next event actually executes.

The distinction between a core engine and an adapter is the following: there can only ever be one core engine running, while there can be several adapters chained up each providing a variation on the base engine execution. For example one can use noisy local clocks with the real time adapter.

A single adapter can be added on top of the DefaultSimulatorImpl by the same two methods above: binding the “SimulatorImplementationType” global value or using the command line argument. To chain multiple adapters a different approach must be used; see the SimulatorAdapter::AddAdapter() API documentation.

The simulator engine type can be set once, but must be set before the first call to the Simulator() API. In practice, since some models have to schedule their start up events when they are constructed, this means generally you should set the engine type before instantiating any other model components.

The engine type can be changed after Simulator::Destroy() but before any additional calls to the Simulator API, for instance when executing multiple runs in a single ns-3 invocation.

2.1.3. Time¶

ns-3 internally represents simulation times and durations as 64-bit signed integers (with the sign bit used for negative durations). The time values are interpreted with respect to a “resolution” unit in the customary SI units: fs, ps, ns, us, ms, s, min, h, d, y. The unit defines the minimum Time value. It can be changed once before any calls to Simulator::Run(). It is not stored with the 64-bit time value itself.

Times can be constructed from all standard numeric types (using the configured default unit) or with explicit units (as in Time MicroSeconds (uint64_t value)). Times can be compared, tested for sign or equality to zero, rounded to a given unit, converted to standard numeric types in specific units. All basic arithmetic operations are supported (addition, subtraction, multiplication or division by a scalar (numeric value)). Times can be written to/read from IO streams. In the case of writing it is easy to choose the output unit, different from the resolution unit.

2.1.4. Scheduler¶

The main job of the Scheduler classes is to maintain the priority queue of future events. The scheduler can be set with a global variable, similar to choosing the SimulatorImpl:

GlobalValue::Bind("SchedulerType",

StringValue("ns3::DistributedSimulatorImpl"));

The scheduler can be changed at any time via Simulator::SetScheduler(). The default scheduler is MapScheduler which uses a std::map<> to store events in time order.

Because event distributions vary by model there is no one best strategy for the priority queue, so ns-3 has several options with differing tradeoffs. The example utils/bench-scheduler.c can be used to test the performance for a user-supplied event distribution. For modest execution times (less than an hour, say) the choice of priority queue is usually not significant; configuring the build type to optimized is much more important in reducing execution times.

The available scheduler types, and a summary of their time and space complexity on Insert() and RemoveNext(), are listed in the following table. See the individual Scheduler API pages for details on the complexity of the other API calls.

| Scheduler Type | Complexity | ||||

|---|---|---|---|---|---|

| SchedulerImpl Type | Method | Time | Space | ||

| Insert() | RemoveNext() | Overhead | Per Event | ||

| CalendarScheduler | <std::list> [] | Constant | Constant | 24 bytes | 16 bytes |

| HeapScheduler | Heap on std::vector | Logarithmic | Logarithmic | 24 bytes | 0 |

| ListScheduler | std::list | Linear | Constant | 24 bytes | 16 bytes |

| MapScheduler | st::map | Logarithmic | Constant | 40 bytes | 32 bytes |

| PriorityQueueScheduler | std::priority_queue<,std::vector> | Logarithmic | Logarithms | 24 bytes | 0 |

2.2. Callbacks¶

Some new users to ns-3 are unfamiliar with an extensively used programming idiom used throughout the code: the ns-3 callback. This chapter provides some motivation on the callback, guidance on how to use it, and details on its implementation.

2.2.1. Callbacks Motivation¶

Consider that you have two simulation models A and B, and you wish to have them pass information between them during the simulation. One way that you can do that is that you can make A and B each explicitly knowledgeable about the other, so that they can invoke methods on each other:

class A {

public:

void ReceiveInput( /* parameters */ );

...

}

and in another source file:

class B {

public:

void DoSomething();

...

private:

A* a_instance; // pointer to an A

}

void

B::DoSomething()

{

// Tell a_instance that something happened

a_instance->ReceiveInput( /* parameters */ );

...

}

This certainly works, but it has the drawback that it introduces a dependency on

A and B to know about the other at compile time (this makes it harder to have

independent compilation units in the simulator) and is not generalized; if in a

later usage scenario, B needs to talk to a completely different C object, the

source code for B needs to be changed to add a c_instance and so forth. It

is easy to see that this is a brute force mechanism of communication that can

lead to programming cruft in the models.

This is not to say that objects should not know about one another if there is a hard dependency between them, but that often the model can be made more flexible if its interactions are less constrained at compile time.

This is not an abstract problem for network simulation research, but rather it has been a source of problems in previous simulators, when researchers want to extend or modify the system to do different things (as they are apt to do in research). Consider, for example, a user who wants to add an IPsec security protocol sublayer between TCP and IP:

If the simulator has made assumptions, and hard coded into the code, that IP always talks to a transport protocol above, the user may be forced to hack the system to get the desired interconnections. This is clearly not an optimal way to design a generic simulator.

2.2.2. Callbacks Background¶

Note

Readers familiar with programming callbacks may skip this tutorial section.

The basic mechanism that allows one to address the problem above is known as a callback. The ultimate goal is to allow one piece of code to call a function (or method in C++) without any specific inter-module dependency.

This ultimately means you need some kind of indirection – you treat the address of the called function as a variable. This variable is called a pointer-to-function variable. The relationship between function and pointer-to-function pointer is really no different that that of object and pointer-to-object.

In C the canonical example of a pointer-to-function is a pointer-to-function-returning-integer (PFI). For a PFI taking one int parameter, this could be declared like,:

int (*pfi)(int arg) = 0;

What you get from this is a variable named simply pfi that is initialized to

the value 0. If you want to initialize this pointer to something meaningful, you

have to have a function with a matching signature. In this case:

int MyFunction(int arg) {}

If you have this target, you can initialize the variable to point to your function like:

pfi = MyFunction;

You can then call MyFunction indirectly using the more suggestive form of the call:

int result = (*pfi)(1234);

This is suggestive since it looks like you are dereferencing the function pointer just like you would dereference any pointer. Typically, however, people take advantage of the fact that the compiler knows what is going on and will just use a shorter form:

int result = pfi(1234);

Notice that the function pointer obeys value semantics, so you can pass it around like any other value. Typically, when you use an asynchronous interface you will pass some entity like this to a function which will perform an action and call back to let you know it completed. It calls back by following the indirection and executing the provided function.

In C++ you have the added complexity of objects. The analogy with the PFI above means you have a pointer to a member function returning an int (PMI) instead of the pointer to function returning an int (PFI).

The declaration of the variable providing the indirection looks only slightly different:

int (MyClass::*pmi)(int arg) = 0;

This declares a variable named pmi just as the previous example declared a

variable named pfi. Since the will be to call a method of an instance of a

particular class, one must declare that method in a class:

class MyClass {

public:

int MyMethod(int arg);

};

Given this class declaration, one would then initialize that variable like this:

pmi = &MyClass::MyMethod;

This assigns the address of the code implementing the method to the variable,

completing the indirection. In order to call a method, the code needs a this

pointer. This, in turn, means there must be an object of MyClass to refer to. A

simplistic example of this is just calling a method indirectly (think virtual

function):

int (MyClass::*pmi)(int arg) = 0; // Declare a PMI

pmi = &MyClass::MyMethod; // Point at the implementation code

MyClass myClass; // Need an instance of the class

(myClass.*pmi)(1234); // Call the method with an object ptr

Just like in the C example, you can use this in an asynchronous call to another module which will call back using a method and an object pointer. The straightforward extension one might consider is to pass a pointer to the object and the PMI variable. The module would just do:

(*objectPtr.*pmi)(1234);

to execute the callback on the desired object.

One might ask at this time, what’s the point? The called module will have to

understand the concrete type of the calling object in order to properly make the

callback. Why not just accept this, pass the correctly typed object pointer and

do object->Method(1234) in the code instead of the callback? This is

precisely the problem described above. What is needed is a way to decouple the

calling function from the called class completely. This requirement led to the

development of the Functor.

A functor is the outgrowth of something invented in the 1960s called a closure. It is basically just a packaged-up function call, possibly with some state.

A functor has two parts, a specific part and a generic part, related through

inheritance. The calling code (the code that executes the callback) will execute

a generic overloaded operator() of a generic functor to cause the callback

to be called. The called code (the code that wants to be called back) will have

to provide a specialized implementation of the operator() that performs the

class-specific work that caused the close-coupling problem above.

With the specific functor and its overloaded operator() created, the called

code then gives the specialized code to the module that will execute the

callback (the calling code).

The calling code will take a generic functor as a parameter, so an implicit cast is done in the function call to convert the specific functor to a generic functor. This means that the calling module just needs to understand the generic functor type. It is decoupled from the calling code completely.

The information one needs to make a specific functor is the object pointer and the pointer-to-method address.

The essence of what needs to happen is that the system declares a generic part of the functor:

template <typename T>

class Functor

{

public:

virtual int operator()(T arg) = 0;

};

The caller defines a specific part of the functor that really is just there to

implement the specific operator() method:

template <typename T, typename ARG>

class SpecificFunctor : public Functor<ARG>

{

public:

SpecificFunctor(T* p, int (T::*_pmi)(ARG arg))

{

m_p = p;

m_pmi = _pmi;

}

virtual int operator()(ARG arg)

{

(*m_p.*m_pmi)(arg);

}

private:

int (T::*m_pmi)(ARG arg);

T* m_p;

};

Here is an example of the usage:

class A

{

public:

A(int a0) : a(a0) {}

int Hello(int b0)

{

std::cout << "Hello from A, a = " << a << " b0 = " << b0 << std::endl;

}

int a;

};

int main()

{

A a(10);

SpecificFunctor<A, int> sf(&a, &A::Hello);

sf(5);

}

Note

The previous code is not real ns-3 code. It is simplistic example code used only to illustrate the concepts involved and to help you understand the system more. Do not expect to find this code anywhere in the ns-3 tree.

Notice that there are two variables defined in the class above. The m_p variable is the object pointer and m_pmi is the variable containing the address of the function to execute.

Notice that when operator() is called, it in turn calls the method provided

with the object pointer using the C++ PMI syntax.

To use this, one could then declare some model code that takes a generic functor as a parameter:

void LibraryFunction(Functor functor);

The code that will talk to the model would build a specific functor and pass it to LibraryFunction:

MyClass myClass;

SpecificFunctor<MyClass, int> functor(&myclass, MyClass::MyMethod);

When LibraryFunction is done, it executes the callback using the

operator() on the generic functor it was passed, and in this particular

case, provides the integer argument:

void

LibraryFunction(Functor functor)

{

// Execute the library function

functor(1234);

}

Notice that LibraryFunction is completely decoupled from the specific

type of the client. The connection is made through the Functor polymorphism.

The Callback API in ns-3 implements object-oriented callbacks using the functor mechanism. This callback API, being based on C++ templates, is type-safe; that is, it performs static type checks to enforce proper signature compatibility between callers and callees. It is therefore more type-safe to use than traditional function pointers, but the syntax may look imposing at first. This section is designed to walk you through the Callback system so that you can be comfortable using it in ns-3.

2.2.3. Using the Callback API¶

The Callback API is fairly minimal, providing only two services:

1. callback type declaration: a way to declare a type of callback with a given signature, and,

2. callback instantiation: a way to instantiate a template-generated forwarding callback which can forward any calls to another C++ class member method or C++ function.

This is best observed via walking through an example, based on

samples/main-callback.cc.

2.2.3.1. Using the Callback API with static functions¶

Consider a function:

static double

CbOne(double a, double b)

{

std::cout << "invoke cbOne a=" << a << ", b=" << b << std::endl;

return a;

}

Consider also the following main program snippet:

int main(int argc, char *argv[])

{

// return type: double

// first arg type: double

// second arg type: double

Callback<double, double, double> one;

}

This is an example of a C-style callback – one which does not include or need

a this pointer. The function template Callback is essentially the

declaration of the variable containing the pointer-to-function. In the example

above, we explicitly showed a pointer to a function that returned an integer and

took a single integer as a parameter, The Callback template function is

a generic version of that – it is used to declare the type of a callback.

Note

Readers unfamiliar with C++ templates may consult http://www.cplusplus.com/doc/tutorial/templates/.

The Callback template requires one mandatory argument (the return type

of the function to be assigned to this callback) and up to five optional

arguments, which each specify the type of the arguments (if your particular

callback function has more than five arguments, then this can be handled

by extending the callback implementation).

So in the above example, we have a declared a callback named “one” that will

eventually hold a function pointer. The signature of the function that it will

hold must return double and must support two double arguments. If one tries

to pass a function whose signature does not match the declared callback,

a compilation error will occur. Also, if one tries to assign to a callback

an incompatible one, compilation will succeed but a run-time

NS_FATAL_ERROR will be raised. The sample program

src/core/examples/main-callback.cc demonstrates both of these error cases

at the end of the main() program.

Now, we need to tie together this callback instance and the actual target function (CbOne). Notice above that CbOne has the same function signature types as the callback– this is important. We can pass in any such properly-typed function to this callback. Let’s look at this more closely:

static double CbOne(double a, double b) {}

^ ^ ^

| | |

| | |

Callback<double, double, double> one;

You can only bind a function to a callback if they have the matching signature. The first template argument is the return type, and the additional template arguments are the types of the arguments of the function signature.

Now, let’s bind our callback “one” to the function that matches its signature:

// build callback instance which points to cbOne function

one = MakeCallback(&CbOne);

This call to MakeCallback is, in essence, creating one of the specialized

functors mentioned above. The variable declared using the Callback

template function is going to be playing the part of the generic functor. The

assignment one = MakeCallback(&CbOne) is the cast that converts the

specialized functor known to the callee to a generic functor known to the caller.

Then, later in the program, if the callback is needed, it can be used as follows:

NS_ASSERT(!one.IsNull());

// invoke cbOne function through callback instance

double retOne;

retOne = one(10.0, 20.0);

The check for IsNull() ensures that the callback is not null – that there

is a function to call behind this callback. Then, one() executes the

generic operator() which is really overloaded with a specific implementation

of operator() and returns the same result as if CbOne() had been

called directly.

2.2.3.2. Using the Callback API with member functions¶

Generally, you will not be calling static functions but instead public member functions of an object. In this case, an extra argument is needed to the MakeCallback function, to tell the system on which object the function should be invoked. Consider this example, also from main-callback.cc:

class MyCb {

public:

int CbTwo(double a) {

std::cout << "invoke cbTwo a=" << a << std::endl;

return -5;

}

};

int main()

{

...

// return type: int

// first arg type: double

Callback<int, double> two;

MyCb cb;

// build callback instance which points to MyCb::cbTwo

two = MakeCallback(&MyCb::CbTwo, &cb);

...

}

Here, we pass an additional object pointer to the MakeCallback<> function.

Recall from the background section above that Operator() will use the pointer to

member syntax when it executes on an object:

virtual int operator()(ARG arg)

{

(*m_p.*m_pmi)(arg);

}

And so we needed to provide the two variables (m_p and m_pmi) when

we made the specific functor. The line:

two = MakeCallback(&MyCb::CbTwo, &cb);

does precisely that. In this case, when two() is invoked:

int result = two(1.0);

will result in a call to the CbTwo member function (method) on the object

pointed to by &cb.

2.2.3.3. Building Null Callbacks¶

It is possible for callbacks to be null; hence it may be wise to

check before using them. There is a special construct for a null

callback, which is preferable to simply passing “0” as an argument;

it is the MakeNullCallback<> construct:

two = MakeNullCallback<int, double>();

NS_ASSERT(two.IsNull());

Invoking a null callback is just like invoking a null function pointer: it will crash at runtime.

2.2.4. Bound Callbacks¶

A very useful extension to the functor concept is that of a Bound Callback.

Previously it was mentioned that closures were originally function calls

packaged up for later execution. Notice that in all of the Callback

descriptions above, there is no way to package up any parameters for use

later – when the Callback is called via operator(). All of

the parameters are provided by the calling function.

What if it is desired to allow the client function (the one that provides the

callback) to provide some of the parameters?

Alexandrescu

calls the process of allowing a client to specify one of the parameters “binding”.

One of the parameters of operator() has been bound (fixed) by the client.

Some of our pcap tracing code provides a nice example of this. There is a function that needs to be called whenever a packet is received. This function calls an object that actually writes the packet to disk in the pcap file format. The signature of one of these functions will be:

static void DefaultSink(Ptr<PcapFileWrapper> file, Ptr<const Packet> p);

The static keyword means this is a static function which does not need a

this pointer, so it will be using C-style callbacks. We don’t want the

calling code to have to know about anything but the Packet. What we want in

the calling code is just a call that looks like:

m_promiscSnifferTrace(m_currentPkt);

What we want to do is to bind the Ptr<PcapFileWriter> file to the

specific callback implementation when it is created and arrange for the

operator() of the Callback to provide that parameter for free.

We provide the MakeBoundCallback template function for that purpose. It

takes the same parameters as the MakeCallback template function but also

takes the parameters to be bound. In the case of the example above:

MakeBoundCallback(&DefaultSink, file);

will create a specific callback implementation that knows to add in the extra bound arguments. Conceptually, it extends the specific functor described above with one or more bound arguments:

template <typename T, typename ARG, typename BOUND_ARG>

class SpecificFunctor : public Functor

{

public:

SpecificFunctor(T* p, int (T::*_pmi)(ARG arg), BOUND_ARG boundArg)

{

m_p = p;

m_pmi = pmi;

m_boundArg = boundArg;

}

virtual int operator()(ARG arg)

{

(*m_p.*m_pmi)(m_boundArg, arg);

}

private:

void (T::*m_pmi)(ARG arg);

T* m_p;

BOUND_ARG m_boundArg;

};

You can see that when the specific functor is created, the bound argument is saved

in the functor / callback object itself. When the operator() is invoked with

the single parameter, as in:

m_promiscSnifferTrace(m_currentPkt);

the implementation of operator() adds the bound parameter into the actual

function call:

(*m_p.*m_pmi)(m_boundArg, arg);

It’s possible to bind two or three arguments as well. Say we have a function with signature:

static void NotifyEvent(Ptr<A> a, Ptr<B> b, MyEventType e);

One can create bound callback binding first two arguments like:

MakeBoundCallback(&NotifyEvent, a1, b1);

assuming a1 and b1 are objects of type A and B respectively. Similarly for three arguments one would have function with a signature:

static void NotifyEvent(Ptr<A> a, Ptr<B> b, MyEventType e);

Binding three arguments in done with:

MakeBoundCallback(&NotifyEvent, a1, b1, c1);

again assuming a1, b1 and c1 are objects of type A, B and C respectively.

This kind of binding can be used for exchanging information between objects in simulation; specifically, bound callbacks can be used as traced callbacks, which will be described in the next section.

2.2.5. Traced Callbacks¶

Placeholder subsection

2.2.6. Callback locations in ns-3¶

Where are callbacks frequently used in ns-3? Here are some of the more visible ones to typical users:

- Socket API

- Layer-2/Layer-3 API

- Tracing subsystem

- API between IP and routing subsystems

2.2.7. Implementation details¶

The code snippets above are simplistic and only designed to illustrate the mechanism itself. The actual Callback code is quite complicated and very template-intense and a deep understanding of the code is not required. If interested, expert users may find the following useful.

The code was originally written based on the techniques described in http://www.codeproject.com/cpp/TTLFunction.asp. It was subsequently rewritten to follow the architecture outlined in Modern C++ Design, Generic Programming and Design Patterns Applied, Alexandrescu, chapter 5, Generalized Functors.

This code uses:

- default template parameters to saves users from having to specify empty parameters when the number of parameters is smaller than the maximum supported number

- the pimpl idiom: the Callback class is passed around by value and delegates the crux of the work to its pimpl pointer.

- two pimpl implementations which derive from CallbackImpl FunctorCallbackImpl can be used with any functor-type while MemPtrCallbackImpl can be used with pointers to member functions.

- a reference list implementation to implement the Callback’s value semantics.

This code most notably departs from the Alexandrescu implementation in that it does not use type lists to specify and pass around the types of the callback arguments. Of course, it also does not use copy-destruction semantics and relies on a reference list rather than autoPtr to hold the pointer.

2.3. Object model¶

ns-3 is fundamentally a C++ object system. Objects can be declared and instantiated as usual, per C++ rules. ns-3 also adds some features to traditional C++ objects, as described below, to provide greater functionality and features. This manual chapter is intended to introduce the reader to the ns-3 object model.

This section describes the C++ class design for ns-3 objects. In brief, several design patterns in use include classic object-oriented design (polymorphic interfaces and implementations), separation of interface and implementation, the non-virtual public interface design pattern, an object aggregation facility, and reference counting for memory management. Those familiar with component models such as COM or Bonobo will recognize elements of the design in the ns-3 object aggregation model, although the ns-3 design is not strictly in accordance with either.

2.3.1. Object-oriented behavior¶

C++ objects, in general, provide common object-oriented capabilities (abstraction, encapsulation, inheritance, and polymorphism) that are part of classic object-oriented design. ns-3 objects make use of these properties; for instance:

class Address

{

public:

Address();

Address(uint8_t type, const uint8_t *buffer, uint8_t len);

Address(const Address & address);

Address &operator=(const Address &address);

...

private:

uint8_t m_type;

uint8_t m_len;

...

};

2.3.2. Object base classes¶

There are three special base classes used in ns-3. Classes that inherit from these base classes can instantiate objects with special properties. These base classes are:

- class

Object - class

ObjectBase - class

SimpleRefCount

It is not required that ns-3 objects inherit from these class, but

those that do get special properties. Classes deriving from

class Object get the following properties.

- the ns-3 type and attribute system (see Configuration and Attributes)

- an object aggregation system

- a smart-pointer reference counting system (class Ptr)

Classes that derive from class ObjectBase get the first two

properties above, but do not get smart pointers. Classes that derive from class

SimpleRefCount: get only the smart-pointer reference counting

system.

In practice, class Object is the variant of the three above that

the ns-3 developer will most commonly encounter.

2.3.3. Memory management and class Ptr¶

Memory management in a C++ program is a complex process, and is often done incorrectly or inconsistently. We have settled on a reference counting design described as follows.

All objects using reference counting maintain an internal reference count to

determine when an object can safely delete itself. Each time that a pointer is

obtained to an interface, the object’s reference count is incremented by calling

Ref(). It is the obligation of the user of the pointer to explicitly

Unref() the pointer when done. When the reference count falls to zero, the

object is deleted.

- When the client code obtains a pointer from the object itself through object creation, or via GetObject, it does not have to increment the reference count.

- When client code obtains a pointer from another source (e.g., copying a

pointer) it must call

Ref()to increment the reference count. - All users of the object pointer must call

Unref()to release the reference.

The burden for calling Unref() is somewhat relieved by the use of

the reference counting smart pointer class described below.

Users using a low-level API who wish to explicitly allocate non-reference-counted objects on the heap, using operator new, are responsible for deleting such objects.

2.3.3.1. Reference counting smart pointer (Ptr)¶

Calling Ref() and Unref() all the time would be cumbersome, so ns-3

provides a smart pointer class Ptr similar to

Boost::intrusive_ptr. This smart-pointer class assumes that the

underlying type provides a pair of Ref and Unref methods that are

expected to increment and decrement the internal refcount of the object

instance.

This implementation allows you to manipulate the smart pointer as if it was a normal pointer: you can compare it with zero, compare it against other pointers, assign zero to it, etc.

It is possible to extract the raw pointer from this smart pointer with the

GetPointer() and PeekPointer() methods.

If you want to store a newed object into a smart pointer, we recommend you to use the CreateObject template functions to create the object and store it in a smart pointer to avoid memory leaks. These functions are really small convenience functions and their goal is just to save you a small bit of typing.

2.3.4. CreateObject and Create¶

Objects in C++ may be statically, dynamically, or automatically created. This holds true for ns-3 also, but some objects in the system have some additional frameworks available. Specifically, reference counted objects are usually allocated using a templated Create or CreateObject method, as follows.

For objects deriving from class Object:

Ptr<WifiNetDevice> device = CreateObject<WifiNetDevice>();

Please do not create such objects using operator new; create them using

CreateObject() instead.

For objects deriving from class SimpleRefCount, or other objects

that support usage of the smart pointer class, a templated helper function is

available and recommended to be used:

Ptr<B> b = Create<B>();

This is simply a wrapper around operator new that correctly handles the reference counting system.

In summary, use Create<B> if B is not an object but just uses reference

counting (e.g. Packet), and use CreateObject<B> if B derives

from ns3::Object.

2.3.5. Aggregation¶

The ns-3 object aggregation system is motivated in strong part by a recognition that a common use case for ns-2 has been the use of inheritance and polymorphism to extend protocol models. For instance, specialized versions of TCP such as RenoTcpAgent derive from (and override functions from) class TcpAgent.

However, two problems that have arisen in the ns-2 model are downcasts and “weak base class.” Downcasting refers to the procedure of using a base class pointer to an object and querying it at run time to find out type information, used to explicitly cast the pointer to a subclass pointer so that the subclass API can be used. Weak base class refers to the problems that arise when a class cannot be effectively reused (derived from) because it lacks necessary functionality, leading the developer to have to modify the base class and causing proliferation of base class API calls, some of which may not be semantically correct for all subclasses.

ns-3 is using a version of the query interface design pattern to avoid these problems. This design is based on elements of the Component Object Model and GNOME Bonobo although full binary-level compatibility of replaceable components is not supported and we have tried to simplify the syntax and impact on model developers.

2.3.6. Examples¶

2.3.6.1. Aggregation example¶

Node is a good example of the use of aggregation in ns-3. Note

that there are not derived classes of Nodes in ns-3 such as class

InternetNode. Instead, components (protocols) are aggregated to a

node. Let’s look at how some Ipv4 protocols are added to a node.:

static void

AddIpv4Stack(Ptr<Node> node)

{

Ptr<Ipv4L3Protocol> ipv4 = CreateObject<Ipv4L3Protocol>();

ipv4->SetNode(node);

node->AggregateObject(ipv4);

Ptr<Ipv4Impl> ipv4Impl = CreateObject<Ipv4Impl>();

ipv4Impl->SetIpv4(ipv4);

node->AggregateObject(ipv4Impl);

}

Note that the Ipv4 protocols are created using CreateObject().

Then, they are aggregated to the node. In this manner, the Node base class does

not need to be edited to allow users with a base class Node pointer to access

the Ipv4 interface; users may ask the node for a pointer to its Ipv4 interface

at runtime. How the user asks the node is described in the next subsection.

Note that it is a programming error to aggregate more than one object of the

same type to an ns3::Object. So, for instance, aggregation is not

an option for storing all of the active sockets of a node.

2.3.6.2. GetObject example¶

GetObject is a type-safe way to achieve a safe downcasting and to allow interfaces to be found on an object.

Consider a node pointer m_node that points to a Node object that has an

implementation of IPv4 previously aggregated to it. The client code wishes to

configure a default route. To do so, it must access an object within the node

that has an interface to the IP forwarding configuration. It performs the

following:

Ptr<Ipv4> ipv4 = m_node->GetObject<Ipv4>();

If the node in fact does not have an Ipv4 object aggregated to it, then the method will return null. Therefore, it is good practice to check the return value from such a function call. If successful, the user can now use the Ptr to the Ipv4 object that was previously aggregated to the node.

Another example of how one might use aggregation is to add optional models to objects. For instance, an existing Node object may have an “Energy Model” object aggregated to it at run time (without modifying and recompiling the node class). An existing model (such as a wireless net device) can then later “GetObject” for the energy model and act appropriately if the interface has been either built in to the underlying Node object or aggregated to it at run time. However, other nodes need not know anything about energy models.

We hope that this mode of programming will require much less need for developers to modify the base classes.

2.3.7. Object factories¶

A common use case is to create lots of similarly configured objects. One can

repeatedly call CreateObject() but there is also a factory design

pattern in use in the ns-3 system. It is heavily used in the “helper” API.

Class ObjectFactory can be used to instantiate objects and to

configure the attributes on those objects:

void SetTypeId(TypeId tid);

void Set(std::string name, const AttributeValue &value);

Ptr<T> Create() const;

The first method allows one to use the ns-3 TypeId system to specify the type of objects created. The second allows one to set attributes on the objects to be created, and the third allows one to create the objects themselves.

For example:

ObjectFactory factory;

// Make this factory create objects of type FriisPropagationLossModel

factory.SetTypeId("ns3::FriisPropagationLossModel")

// Make this factory object change a default value of an attribute, for

// subsequently created objects

factory.Set("SystemLoss", DoubleValue(2.0));

// Create one such object

Ptr<Object> object = factory.Create();

factory.Set("SystemLoss", DoubleValue(3.0));

// Create another object with a different SystemLoss

Ptr<Object> object = factory.Create();

2.3.8. Downcasting¶

A question that has arisen several times is, “If I have a base class pointer

(Ptr) to an object and I want the derived class pointer, should I downcast (via

C++ dynamic cast) to get the derived pointer, or should I use the object

aggregation system to GetObject<> () to find a Ptr to the interface

to the subclass API?”

The answer to this is that in many situations, both techniques will work. ns-3 provides a templated function for making the syntax of Object dynamic casting much more user friendly:

template <typename T1, typename T2>

Ptr<T1>

DynamicCast(Ptr<T2> const&p)

{

return Ptr<T1>(dynamic_cast<T1 *>(PeekPointer(p)));

}

DynamicCast works when the programmer has a base type pointer and is testing against a subclass pointer. GetObject works when looking for different objects aggregated, but also works with subclasses, in the same way as DynamicCast. If unsure, the programmer should use GetObject, as it works in all cases. If the programmer knows the class hierarchy of the object under consideration, it is more direct to just use DynamicCast.

2.4. Configuration and Attributes¶

In ns-3 simulations, there are two main aspects to configuration:

- The simulation topology and how objects are connected.

- The values used by the models instantiated in the topology.

This chapter focuses on the second item above: how the many values in use in ns-3 are organized, documented, and modifiable by ns-3 users. The ns-3 attribute system is also the underpinning of how traces and statistics are gathered in the simulator.

In the course of this chapter we will discuss the various ways to set or modify the values used by ns-3 model objects. In increasing order of specificity, these are:

| Method | Scope |

|---|---|

Default Attribute values set when

Attributes are defined in

GetTypeId(). |

Affect all instances of the class. |

CommandLine

Config::SetDefault()

ConfigStore |

Affect all future instances. |

ObjectFactory |

Affects all instances created with the factory. |

| Helper methods with (string/ AttributeValue) parameter pairs | Affects all instances created by the helper. |

MyClass::SetX()

Object::SetAttribute()

Config::Set() |

Alters this particular instance. Generally this is the only form which can be scheduled to alter an instance once the simulation is running. |

By “specificity” we mean that methods in later rows in the table override the values set by, and typically affect fewer instances than, earlier methods.

Before delving into details of the attribute value system, it will help to

review some basic properties of class Object.

2.4.1. Object Overview¶

ns-3 is fundamentally a C++ object-based system. By this we mean that new C++ classes (types) can be declared, defined, and subclassed as usual.

Many ns-3 objects inherit from the Object base class. These

objects have some additional properties that we exploit for organizing the

system and improving the memory management of our objects:

- “Metadata” system that links the class name to a lot of meta-information

about the object, including:

- The base class of the subclass,

- The set of accessible constructors in the subclass,

- The set of “attributes” of the subclass,

- Whether each attribute can be set, or is read-only,

- The allowed range of values for each attribute.

- Reference counting smart pointer implementation, for memory management.

ns-3 objects that use the attribute system derive from either

Object or ObjectBase. Most ns-3 objects we

will discuss derive from Object, but a few that are outside

the smart pointer memory management framework derive from

ObjectBase.

Let’s review a couple of properties of these objects.

2.4.1.1. Smart Pointers¶

As introduced in the ns-3 tutorial, ns-3 objects are memory managed by a

reference counting smart pointer implementation, class Ptr.

Smart pointers are used extensively in the ns-3 APIs, to avoid passing references to heap-allocated objects that may cause memory leaks. For most basic usage (syntax), treat a smart pointer like a regular pointer:

Ptr<WifiNetDevice> nd = ...;

nd->CallSomeFunction();

// etc.

So how do you get a smart pointer to an object, as in the first line of this example?

2.4.1.1.1. CreateObject¶

As we discussed above in Memory management and class Ptr, at the

lowest-level API, objects of type Object are not instantiated

using operator new as usual but instead by a templated function called

CreateObject().

A typical way to create such an object is as follows:

Ptr<WifiNetDevice> nd = CreateObject<WifiNetDevice>();

You can think of this as being functionally equivalent to:

WifiNetDevice* nd = new WifiNetDevice();

Objects that derive from Object must be allocated on the heap

using CreateObject(). Those deriving from ObjectBase,

such as ns-3 helper functions and packet headers and trailers,

can be allocated on the stack.

In some scripts, you may not see a lot of CreateObject() calls

in the code; this is because there are some helper objects in effect

that are doing the CreateObject() calls for you.

2.4.1.2. TypeId¶

ns-3 classes that derive from class Object can include

a metadata class called TypeId that records meta-information

about the class, for use in the object aggregation and component manager

systems:

- A unique string identifying the class.

- The base class of the subclass, within the metadata system.

- The set of accessible constructors in the subclass.

- A list of publicly accessible properties (“attributes”) of the class.

2.4.1.3. Object Summary¶

Putting all of these concepts together, let’s look at a specific

example: class Node.

The public header file node.h has a declaration that includes

a static GetTypeId() function call:

class Node : public Object

{

public:

static TypeId GetTypeId();

...

This is defined in the node.cc file as follows:

TypeId

Node::GetTypeId()

{

static TypeId tid = TypeId("ns3::Node")

.SetParent<Object>()

.SetGroupName("Network")

.AddConstructor<Node>()

.AddAttribute("DeviceList",

"The list of devices associated to this Node.",

ObjectVectorValue(),

MakeObjectVectorAccessor(&Node::m_devices),

MakeObjectVectorChecker<NetDevice>())

.AddAttribute("ApplicationList",

"The list of applications associated to this Node.",

ObjectVectorValue(),

MakeObjectVectorAccessor(&Node::m_applications),

MakeObjectVectorChecker<Application>())

.AddAttribute("Id",

"The id(unique integer) of this Node.",

TypeId::ATTR_GET, // allow only getting it.

UintegerValue(0),

MakeUintegerAccessor(&Node::m_id),

MakeUintegerChecker<uint32_t>())

;

return tid;

}

Consider the TypeId of the ns-3 Object class

as an extended form of run time type information (RTTI). The C++ language

includes a simple kind of RTTI in order to support dynamic_cast and

typeid operators.

The SetParent<Object>() call in the definition above is used in

conjunction with our object aggregation mechanisms to allow safe up- and

down-casting in inheritance trees during GetObject().

It also enables subclasses to inherit the Attributes of their parent class.

The AddConstructor<Node>() call is used in conjunction

with our abstract object factory mechanisms to allow us to construct

C++ objects without forcing a user to know the concrete class of

the object she is building.

The three calls to AddAttribute() associate a given string

with a strongly typed value in the class. Notice that you must provide

a help string which may be displayed, for example, via command line

processors. Each Attribute is associated with mechanisms

for accessing the underlying member variable in the object (for example,

MakeUintegerAccessor() tells the generic Attribute

code how to get to the node ID above). There are also “Checker” methods which

are used to validate values against range limitations, such as maximum

and minimum allowed values.

When users want to create Nodes, they will usually call some form of

CreateObject(),:

Ptr<Node> n = CreateObject<Node>();

or more abstractly, using an object factory, you can create a

Node object without even knowing the concrete C++ type:

ObjectFactory factory;

const std::string typeId = "ns3::Node'';

factory.SetTypeId(typeId);

Ptr<Object> node = factory.Create <Object>();

Both of these methods result in fully initialized attributes being available

in the resulting Object instances.

We next discuss how attributes (values associated with member variables or

functions of the class) are plumbed into the above TypeId.

2.4.2. Attributes¶

The goal of the attribute system is to organize the access of internal member objects of a simulation. This goal arises because, typically in simulation, users will cut and paste/modify existing simulation scripts, or will use higher-level simulation constructs, but often will be interested in studying or tracing particular internal variables. For instance, use cases such as:

- “I want to trace the packets on the wireless interface only on the first access point.”

- “I want to trace the value of the TCP congestion window (every time it changes) on a particular TCP socket.”

- “I want a dump of all values that were used in my simulation.”

Similarly, users may want fine-grained access to internal variables in the simulation, or may want to broadly change the initial value used for a particular parameter in all subsequently created objects. Finally, users may wish to know what variables are settable and retrievable in a simulation configuration. This is not just for direct simulation interaction on the command line; consider also a (future) graphical user interface that would like to be able to provide a feature whereby a user might right-click on an node on the canvas and see a hierarchical, organized list of parameters that are settable on the node and its constituent member objects, and help text and default values for each parameter.

2.4.2.1. Available AttributeValue Types¶

AddressValue

AttributeContainerValue

BooleanValue

BoxValue

CallbackValue

DataRateValue

DoubleValue

EmptyAttributeValue

EnumValue

IntegerValue

Ipv4AddressValue

Ipv4MaskValue

Ipv6AddressValue

Ipv6PrefixValue

LengthValue

Mac16AddressValue

Mac48AddressValue

Mac64AddressValue

ObjectFactoryValue

ObjectPtrContainerValue

PairValue<A, B>

PointerValue

PriomapValue

QueueSizeValue

RectangleValue

SsidValue

TimeValue

TupleValue<Args…>

A TupleValue is capable of storing values of different types, hence it is suitable for structured data. A prominent example is the ChannelSettings attribute of WifiPhy, which consists of channel number, channel width, PHY band and primary 20 MHz channel index. In this case the values have to be mutually consistent, which makes it difficult to set them as individual Attributes. Capturing them in a TupleValue simplifies this problem, see

src/wifi/model/wifi-phy.cc.Values stored in a TupleValue object can be set/get through a std::tuple object or can be serialized to/deserialized from a string containing a comma-separated sequence of the values enclosed in a pair of curly braces (e.g., “{36, 20, BAND_5GHZ, 0}”).

The usage of the TupleValue attribute is illustrated in

src/core/test/tuple-value-test-suite.cc.TypeIdValue

UanModesListValue

UintegerValue

Vector2DValue

Vector3DValue

WaypointValue

WifiModeValue

2.4.2.2. Defining Attributes¶

We provide a way for users to access values deep in the system, without having

to plumb accessors (pointers) through the system and walk pointer chains to get

to them. Consider a class QueueBase that has a member variable

m_maxSize controlling the depth of the queue.

If we look at the declaration of QueueBase, we see

the following:

class QueueBase : public Object {

public:

static TypeId GetTypeId();

...

private:

...

QueueSize m_maxSize; //!< max queue size

...

};

QueueSize is a special type in ns-3 that allows size

to be represented in different units:

enum QueueSizeUnit

{

PACKETS, /**< Use number of packets for queue size */

BYTES, /**< Use number of bytes for queue size */

};

class QueueSize

{

...

private:

...

QueueSizeUnit m_unit; //!< unit

uint32_t m_value; //!< queue size [bytes or packets]

};

Finally, the class DropTailQueue inherits from this base

class and provides the semantics that packets that are submitted to

a full queue will be dropped from the back of the queue (“drop tail”).

/**

* \ingroup queue

*

* \brief A FIFO packet queue that drops tail-end packets on overflow

*/

template <typename Item>

class DropTailQueue : public Queue<Item>

Let’s consider things that a user may want to do with the value of

m_maxSize:

- Set a default value for the system, such that whenever a new

DropTailQueueis created, this member is initialized to that default. - Set or get the value on an already instantiated queue.

The above things typically require providing Set() and Get()

functions, and some type of global default value.

In the ns-3 attribute system, these value definitions and accessor function

registrations are moved into the TypeId class; e.g.:

NS_OBJECT_ENSURE_REGISTERED(QueueBase);

TypeId

QueueBase::GetTypeId()

{

static TypeId tid = TypeId("ns3::DropTailQueue")

.SetParent<Queue>()

.SetGroupName("Network")

...

.AddAttribute("MaxSize",

"The max queue size",

QueueSizeValue(QueueSize("100p")),

MakeQueueSizeAccessor(&QueueBase::SetMaxSize,

&QueueBase::GetMaxSize),

MakeQueueSizeChecker())

...

;

return tid;

}

The AddAttribute() method is performing a number of things for the

m_maxSize value:

- Binding the (usually private) member variable

m_maxSizeto a public string"MaxSize". - Providing a default value (0 packets).

- Providing some help text defining the meaning of the value.

- Providing a “Checker” (not used in this example) that can be used to set bounds on the allowable range of values.

The key point is that now the value of this variable and its default value are

accessible in the attribute namespace, which is based on strings such as

"MaxSize" and TypeId name strings. In the next section,

we will provide an example script that shows how users may manipulate

these values.

Note that initialization of the attribute relies on the macro

NS_OBJECT_ENSURE_REGISTERED(QueueBase) being called; if you leave this

out of your new class implementation, your attributes will not be initialized

correctly.

While we have described how to create attributes, we still haven’t described how

to access and manage these values. For instance, there is no globals.h

header file where these are stored; attributes are stored with their classes.

Questions that naturally arise are how do users easily learn about all of the

attributes of their models, and how does a user access these attributes, or

document their values as part of the record of their simulation?

Detailed documentation of the actual attributes defined for a type, and a global list of all defined attributes, are available in the API documentation. For the rest of this document we are going to demonstrate the various ways of getting and setting attribute values.

2.4.2.3. Setting Default Values¶

2.4.2.3.1. Config::SetDefault and CommandLine¶

Let’s look at how a user script might access a specific attribute value.

We’re going to use the

src/point-to-point/examples/main-attribute-value.cc

script for illustration, with some details stripped out. The main

function begins:

// This is a basic example of how to use the attribute system to

// set and get a value in the underlying system; namely, the maximum

// size of the FIFO queue in the PointToPointNetDevice

//

int

main(int argc, char *argv[])

{

// Queues in ns-3 are objects that hold items (other objects) in

// a queue structure. The C++ implementation uses templates to

// allow queues to hold various types of items, but the most

// common is a pointer to a packet(Ptr<Packet>).

//

// The maximum queue size can either be enforced in bytes ('b') or

// packets ('p'). A special type called the ns3::QueueSize can

// hold queue size values in either unit (bytes or packets). The

// queue base class ns3::QueueBase has a MaxSize attribute that can

// be set to a QueueSize.

// By default, the MaxSize attribute has a value of 100 packets ('100p')

// (this default can be observed in the function QueueBase::GetTypeId)

//

// Here, we set it to 80 packets. We could use one of two value types:

// a string-based value or a QueueSizeValue value

Config::SetDefault("ns3::QueueBase::MaxSize", StringValue("80p"));

// The below function call is redundant

Config::SetDefault("ns3::QueueBase::MaxSize", QueueSizeValue(QueueSize(QueueSizeUnit::PACKETS, 80)));

The main thing to notice in the above are the two equivalent calls to

Config::SetDefault(). This is how we set the default value

for all subsequently instantiated DropTailQueues. We illustrate

that two types of Value classes, a StringValue and

a QueueSizeValue class, can be used to assign the value

to the attribute named by “ns3::QueueBase::MaxSize”.

It is also possible to manipulate Attributes using the CommandLine;

we saw some examples early in the ns-3 Tutorial. In particular, it is

straightforward to add a shorthand argument name, such as --maxSize,

for an Attribute that is particular relevant for your model, in this case

"ns3::QueueBase::MaxSize". This has the additional feature that

the help string for the Attribute will be printed as part of the usage

message for the script. For more information see

the CommandLine API documentation.

// Allow the user to override any of the defaults and the above

// SetDefaults() at run-time, via command-line arguments

// For example, via "--ns3::QueueBase::MaxSize=80p"

CommandLine cmd;

// This provides yet another way to set the value from the command line:

cmd.AddValue("maxSize", "ns3::QueueBase::MaxSize");

cmd.Parse(argc, argv);

Now, we will create a few objects using the low-level API. Our

newly created queues will not have m_maxSize initialized to

0 packets, as defined in the QueueBase::GetTypeId()

function, but to 80 packets, because of what we did above with

default values.:

Ptr<Node> n0 = CreateObject<Node>();

Ptr<PointToPointNetDevice> net0 = CreateObject<PointToPointNetDevice>();

n0->AddDevice(net0);

Ptr<Queue<Packet>> q = CreateObject<DropTailQueue<Packet>> ();

net0->AddQueue(q);

At this point, we have created a single Node (n0)

and a single PointToPointNetDevice (net0),

added a DropTailQueue (q) to net0,

which will be configured with a queue size limit of 80 packets.

As a final note, the Config::Set…() functions will throw an error if the targeted Attribute does not exist at the path given. There are also “fail-safe” versions, Config::Set…FailSafe(), if you can’t be sure the Attribute exists. The fail-safe versions return true if at least one instance could be set.

2.4.2.3.2. Constructors, Helpers and ObjectFactory¶

Arbitrary combinations of attributes can be set and fetched from the helper and low-level APIs; either from the constructors themselves:

Ptr<GridPositionAllocator> p =

CreateObjectWithAttributes<GridPositionAllocator>

("MinX", DoubleValue(-100.0),

"MinY", DoubleValue(-100.0),

"DeltaX", DoubleValue(5.0),

"DeltaY", DoubleValue(20.0),

"GridWidth", UintegerValue(20),

"LayoutType", StringValue("RowFirst"));

or from the higher-level helper APIs, such as:

mobility.SetPositionAllocator

("ns3::GridPositionAllocator",

"MinX", DoubleValue(-100.0),

"MinY", DoubleValue(-100.0),

"DeltaX", DoubleValue(5.0),

"DeltaY", DoubleValue(20.0),

"GridWidth", UintegerValue(20),

"LayoutType", StringValue("RowFirst"));

We don’t illustrate it here, but you can also configure an

ObjectFactory with new values for specific attributes.

Instances created by the ObjectFactory will have those

attributes set during construction. This is very similar to using

one of the helper APIs for the class.

To review, there are several ways to set values for attributes for class instances to be created in the future:

Config::SetDefault()CommandLine::AddValue()CreateObjectWithAttributes<>()- Various helper APIs

But what if you’ve already created an instance, and you want

to change the value of the attribute? In this example, how can we

manipulate the m_maxSize value of the already instantiated

DropTailQueue? Here are various ways to do that.

2.4.2.4. Changing Values¶

2.4.2.4.1. SmartPointer¶

Assume that a smart pointer (Ptr) to a relevant network device

is in hand; in the current example, it is the net0 pointer.

One way to change the value is to access a pointer to the underlying queue and modify its attribute.

First, we observe that we can get a pointer to the (base class)

Queue via the

PointToPointNetDevice attributes, where it is called

"TxQueue":

PointerValue ptr;

net0->GetAttribute("TxQueue", ptr);

Ptr<Queue<Packet>> txQueue = ptr.Get<Queue<Packet>>();

Using the GetObject() function, we can perform a safe downcast

to a DropTailQueue. The NS_ASSERT checks that the pointer is

valid.

Ptr<DropTailQueue<Packet>> dtq = txQueue->GetObject<DropTailQueue<Packet>>();

NS_ASSERT (dtq);

Next, we can get the value of an attribute on this queue. We have introduced

wrapper Value classes for the underlying data types, similar

to Java wrappers around these types, since the attribute system stores values

serialized to strings, and not disparate types. Here, the attribute value

method on this value produces the (unwrapped) QueueSize. That is,

is assigned to a QueueSizeValue, and the Get()

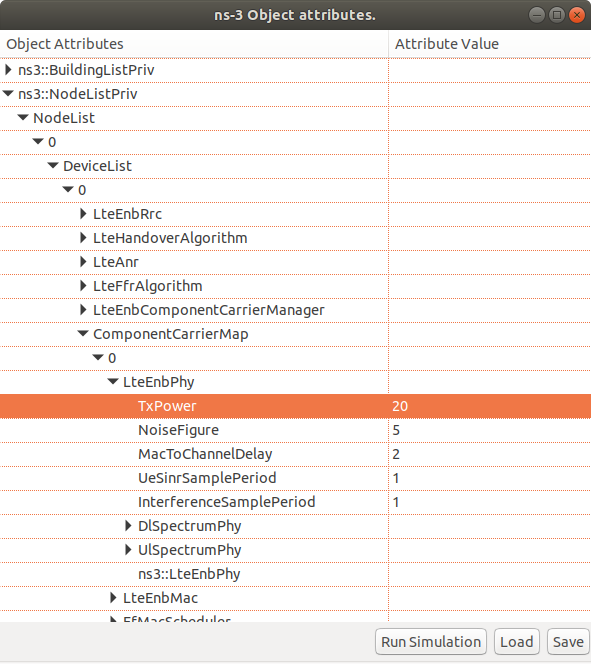

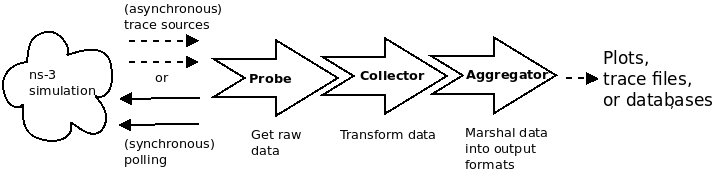

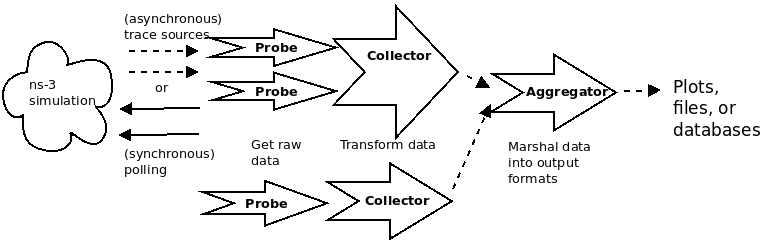

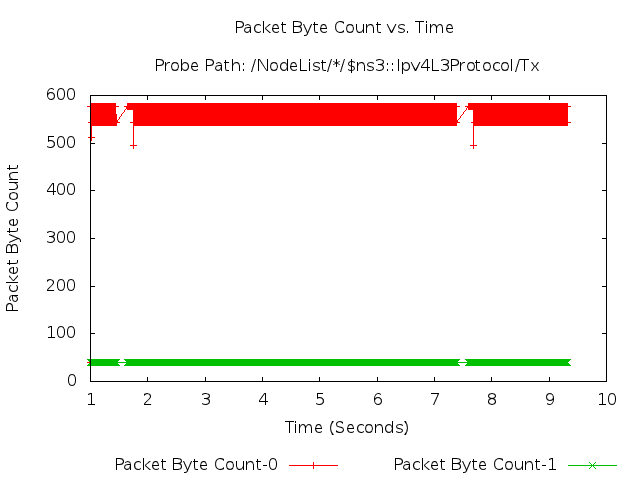

the variable limit is written into by the GetAttribute method.: